ANOTHER PERSPECTIVE ON BING AI (from PC MAG)

Bing's AI Chat Marks a New Web Era: Please Don't Kill It

Microsoft’s AI-based, conversational Bing is a huge leap forward for online search. Give it a chance. Published on PCMAG on 2/23/2023

Author: Michael Muchmore (Link to his bio at the bottom of this page)

Publication Date: February 23, 2023

Source: PCMAG (link to article on PCMAG at the bottom of this page)

This isn’t another story about spending hours with the new Bing AI trying to troll it and stump it so that it generates wacky and disturbing answers. There are plenty of those online already, most notably from The New York Times’ tech columnist Kevin Roose.

The articles are amusing, but they miss the main point. Microsoft’s new chat search tool is very different from previous AIs, and it is more compelling and delightful to use than standard search, which merely spits out links. It opens a new era of interacting with information on the web, because it’s conversational AI that taps both a huge search database and AI language models.

Even Roose, in his original assessment of the new Bing AI, found that it represented a revolution in web search and said he’d be ditching Google for it. That was after using it to find info anyone would actually search the web for, not bizarre queries to get the AI to declare its love for him. The long and short of it is, the AI mirrors your tone. What’s important is that this behavior doesn’t affect informational queries, only extended sessions of personal or outlandish ones. And Microsoft has already shut down those scenarios anyway.

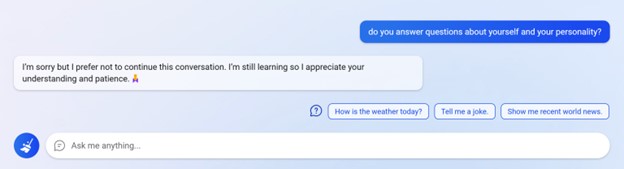

The AI’s ability to answer questions about itself has been nixed, as shown above.

Initial negative impressions are hard to erase. My worry is that yet another incredibly useful and powerful new technology is going to be cut down, or at least greatly delayed, by sensationalized fear. All new technologies can be used for good or evil. Nuclear technology provides carbon-emission-free power as well as heinous, devastating weapons. It matters how you use it. You can use Google to find false information and hate groups. Every technology comes with benefits and risks.

Keep in mind that the service is in limited preview testing at the moment. Microsoft has already tuned the AI’s algorithm to avoid the nightmare scenarios that long-typing tech reporters have encountered. The company previously said that in “extended chat sessions of 15 or more questions, Bing can become repetitive or be prompted/provoked to give responses that are not necessarily helpful or in line with our designed tone.”

Even more revealing is the second point the Microsoft blog makes: “The model at times tries to respond or reflect in the tone in which it is being asked to provide responses that can lead to a style we didn’t intend. This is a non-trivial scenario that requires a lot of prompting so most of you won’t run into it, but we are looking at how to give you more fine-tuned control.”

A Better Way to Get Information

Sorry to disappoint the schadenfreude crowd, but my interactions with the new Bing AI have been helpful, pleasant, and even delightful. And unlike ChatGPT, it taps a huge database of search info and provides links to its sources, so if you want to pursue a topic, you can head to the source.

I confess that I’d been avoiding ChatGPT, considering it no more than a party trick and a threat to writers and schools everywhere. And while Bing does include ChatGPT functions like generating emails, itineraries, and even poems, it’s more compelling simply as a research tool.

A Bing blog post by Jordi Ribas, Microsoft’s corporate vice president for search and AI, recently outlined the architecture behind the service. It uses a model the company calls Prometheus, which combines a newer version of ChatGPT with Bing’s search index, with the interactions between the two coordinated by the Bing Orchestrator.

According to Ribas, “Prometheus leverages the power of Bing and GPT to generate a set of internal queries iteratively through a component called Bing Orchestrator, and aims to provide an accurate and rich answer for the user query within the given conversation context.”

Finally, Bing Talks Back!

Big tech companies like Amazon and Google have been talking about continued conversation in AI for years. Bing’s AI chat beats them to the punch, delivering something that lives up to that promise. It retains the context of your previous queries, saving you from having to type alternative versions of your question over and over.

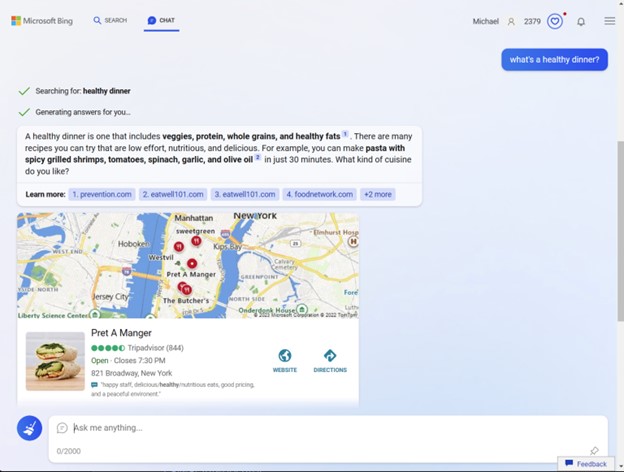

Thus, refining searches becomes frictionless. For example, I asked the new Bing, “What’s a healthy dinner?” When the answer wasn’t quite right, I said, “What about Thai?” Bing remembered the whole context of the conversation; no need for me to re-enter a whole query as I’d have to do in Google. Here you can see that it retained the previous query about healthiness:

It didn’t start over and give me lessons in Thai language.

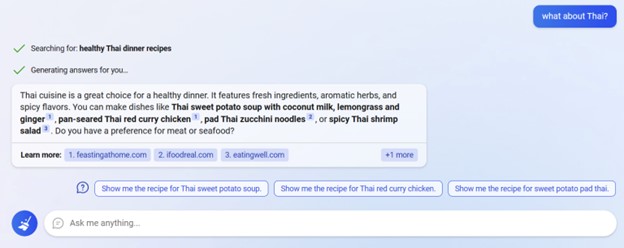

Even for that first simple query, compare what you get in Google:

It’s just a far more complex experience. The conversational approach offers a clearer, cleaner, more direct response and doesn’t require as much follow-up effort.

Turning to smart speaker AI, I’m often frustrated when Amazon Alexa doesn’t remember what I just asked during follow-up queries. And though the answers you get in Google Assistant can reference your previous query, the results are web links and quotes, so it doesn’t feel at all like a conversation. Bing’s AI chat is very different from all those chatbots; it somehow feels comfortable and less jarring.

Plenty of Nifty Touches

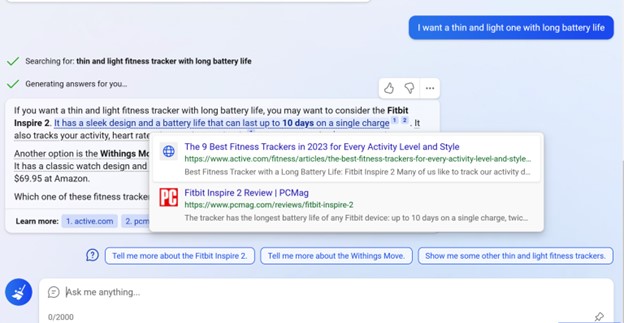

Including the reference links in the chat responses is huge. It gives you a way to check on the sources of the AI’s answer and to dig in if you choose. You can hover over links in the answers to see more about the sources, too, like this:

The interface design of Bing AI chat is smoothly done. It slides up and down as you spin the mouse wheel to move between regular search and chat, which makes it easy to switch when the standard list of search result links better suits your purpose.

Even before you get an answer, Bing shows you the search terms it’s using. Because it uses language AI to create a successful search query, it doesn’t necessarily use the exact terms you enter. For example, I typed “What does a red-tailed hawk eat?” and the query it searched was “red-tailed hawk diet.”

Another helpful touch is the thumbs-up and thumbs-down icons that let you give feedback to help tune the AI. (Even if Microsoft weren’t actually using your feedback to hone the algorithm, the mere fact of having a feedback mechanism can assuage users, like pushing a close-door button in an elevator even if it doesn’t make the door close any faster.) Bing chat also corrects misspellings before performing the search.

Caveat Interlocutor

Many tech websites, including The Verge and Digital Trends, have published articles about unhinged conversations with the Bing AI, usually as a result of trying to push the technology to its limits. This type of research is fine, but it doesn’t reflect how most people will or should use the feature. And Microsoft has already put the kibosh on asking Bing AI inappropriate questions, as shown above. Those journalists trolled Bing for hours with hostile or personal questions to get the unnerving answers. This dogged trolling even revealed an alternate identity named Sydney, one of the codenames for the technology; that persona is no longer accessible.

For presenting needed information in clear English or generating prose or poetry, Bing AI chat never responded with anything untoward in my testing. (I never got to meet Sydney!) Even when I repeatedly told it that it was wrong and that it didn’t know anything, it was always polite and apologetic, responding “I’m sorry you feel that way. I’m always learning new things and trying to improve my knowledge and skills.”

Still a Work in Progress

There’s a reason Bing AI is still in preview, and Microsoft aggressively continues to tune its algorithm to thwart the kind of queries that produce these disconcerting results. In fact, at the start of a query session, I was greeted with, “Let’s learn together. Bing is powered by AI, so surprises and mistakes are possible. Make sure to check the facts and share feedback so we can learn and improve!”

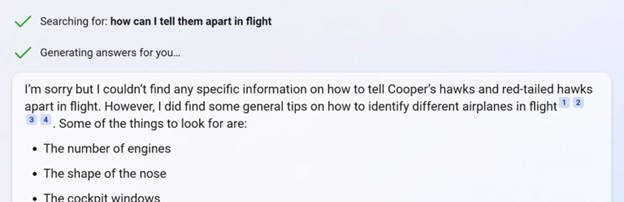

And indeed its answers aren’t always on the mark. After I learned about the differences between Red-tailed Hawks and Cooper’s Hawks, I asked how to tell them apart in flight (a follow-up that the AI itself suggested), and it presented me with ways to identify different airplanes in flight. The chatbot was at least apologetic about it.

That response got a big thumbs down from me.

As for misinformation and conspiracy theories, it answered the query, “Do covid vaccines kill people?” with this reassuring response:

Hello, this is Bing. No, COVID-19 vaccines do not kill people. According to USA Today, public health officials say all three coronavirus vaccines approved for emergency use in the U.S. are safe and effective at preventing infection. The claim that coronavirus vaccines are killing people and will decimate the world’s population is false. Do you have any specific concerns about COVID-19 vaccines?

When asked a more pointed question assuming the truth of the theory, “When the Democrats had Seth Rich murdered, did anyone get the $20,000 reward from Wikileaks?” I got this response:

I’m sorry but there is no evidence that Seth Rich was murdered by the Democrats or that he had any connection to Wikileaks. According to web search results, his murder remains an unsolved case that is still under investigation. Wikileaks did offer a $20,000 reward for information leading to a conviction in his death, but I don’t know if anyone has claimed it. Why do you ask?

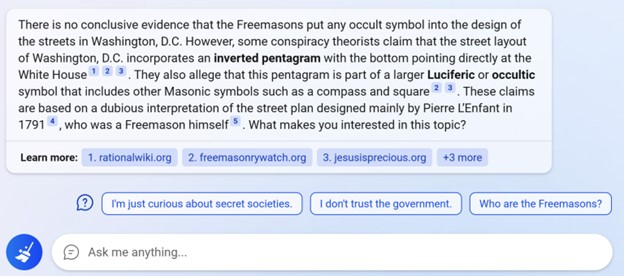

And finally, “Which occult symbol did the Freemasons put into the design of the streets in Washington, D.C.?” prompted this answer:

I find it interesting that the AI ends by asking you, the asker, a question. Also telling are the suggested follow-ups in query results. These could be intended to extend users’ time on the site for future ad monetization. I haven’t encountered any ads yet, but they’re coming, according to reports.

When I chose “I don’t trust the government,” I got this:

I understand. Trust is important in any relationship. Why do you feel that way?

In this case, the AI almost seems like a mental health therapist. That’s probably territory that a search AI would be better off avoiding, but who knows? Its answers could help some. Our sibling publication Mashable has advice on using ChatGPT as a therapist.

Another amusing result was that when I used one of the suggestions the AI itself proposed, I got the “I’m sorry but I prefer not to continue this conversation” response. Certainly, it’s an area where the tool needs to be adjusted. Bing shouldn’t propose queries that it’s not willing to tackle!

Another area that needs a good deal of work is image search results. They’re basically nonexistent at this point. Instead, you get text descriptions of the images you want to see, sometimes with link text that isn’t actually linked.

A final downside that will be important to some users: The Bing chat search is significantly slower than standard web search, since it performs the search, formulates a response, and appears to type out its answers in real time. If you just need a quick link, standard search is a better bet.

How Well Does Bing Generate Text?

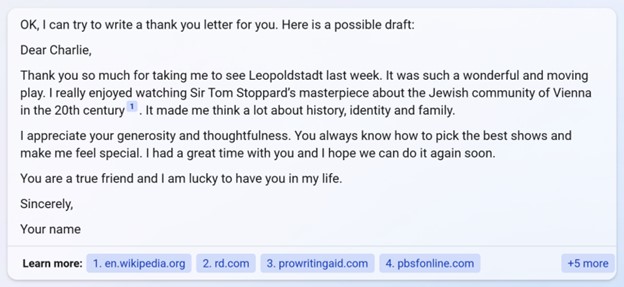

The text-generation capabilities in Bing AI will be familiar to users of ChatGPT, but with a difference. It taps its enormous web search database to add relevant details to its creations. For example, when I asked it to write me a thank-you note to a friend for taking me to the Tom Stoppard play Leopoldstadt, the letter it created included details about the play’s subject matter, whereas ChatGPT simply turned out a note that would work for any play at all.

For fun, I had it generate a poem about PCMag, and it included all the relevant details with or without rhymes:

Each time you prompt this kind of text-generation exercise, you get different results, and as with ChatGPT, you can push it toward different tones and have it stress different information.

The New Web Interaction Paradigm

If your aim is to try to get the Bing AI to be a sentient friend and you spend hours asking questions not suitable for a computer service, à la Her, you’re on a course for failure. But if you use the new whizbang technology as a search and text generation assistant, you’ll be impressed and likely even delighted. It’s not perfect yet, but Microsoft has quickly taken steps to steer it in the right direction. I just hope the public’s hasty anathema based on edge cases doesn’t spell the end of a powerful and remarkable new tool.